Introduction

The social media platform X, formerly Twitter, has long grappled with the pervasive issues of fake accounts and astroturfing—coordinated efforts to manipulate public opinion by mimicking grassroots support. These challenges, deeply rooted in Twitter’s pre-2022 era, have drawn scrutiny for undermining authentic discourse. Since Elon Musk’s acquisition of the platform in October 2022, X has implemented measures to address these problems, with mixed results. This article explores the progress X has made in countering fake accounts and astroturfing as of April 2025, provides background on the inherited issues from Twitter, and examines allegations of collusion involving Twitter’s former staff and owners.

Background: The Inherited Problem of Fake Accounts and Astroturfing

When Twitter operated under its original ownership, fake accounts and astroturfing were significant concerns, driven by the platform’s open nature and limited moderation resources. Studies from 2018 to 2022 estimated that 5-15% of Twitter’s accounts were bots or fake, often used to amplify political narratives, spread disinformation, or inflate follower counts for commercial gain. Astroturfing, defined as orchestrated campaigns posing as organic public support, was particularly problematic during elections and major global events.

Notable examples include:

- 2016 U.S. Election: Russian-linked accounts used bots to amplify divisive content, reaching millions of users. The scale of this interference, later detailed in U.S. investigations, exposed Twitter’s inadequate detection systems.

- South Korean Election (2012): A National Intelligence Service campaign deployed coordinated accounts to sway public opinion, revealing how astroturfing could exploit platform vulnerabilities.

- Corporate Manipulation: Brands and PR firms frequently used sockpuppet accounts to post fake reviews or attack competitors, undermining trust in online discourse.

Twitter’s pre-2022 moderation relied on a combination of automated tools and human review, but its trust and safety teams were often overwhelmed. The platform’s Birdwatch program, launched as a pilot in 2021 and later renamed Community Notes, aimed to crowdsource fact-checking, but its effectiveness was limited by slow adoption and inconsistent enforcement. By the time of Musk’s acquisition, Twitter’s reputation as a “cultural barometer” was tainted by its role as a conduit for misinformation, with critics arguing that its policies prioritized engagement over authenticity.

Allegations of Collusion with Twitter’s Former Staff and Owners

Concerns about collusion between Twitter’s pre-2022 staff, leadership, or owners and external entities have surfaced, particularly in the context of content moderation and account management. While no definitive evidence of systemic collusion has been confirmed, several findings and allegations have fueled speculation:

- Twitter Files (2022-2023): Internal documents released post-acquisition, dubbed the “Twitter Files,” suggested that Twitter’s former leadership engaged in selective content moderation, allegedly at the behest of government agencies or political groups. These documents, shared by journalists like Matt Taibbi, pointed to instances where accounts were shadowbanned or suppressed based on political leanings, though the extent of external influence remains debated. Critics argue this reflects bias rather than collusion, while supporters of the claims see it as evidence of coordinated censorship.

- Amuse Account Case: The anonymous X account “Amuse,” linked to conservative pundit Alexander Muse, was permanently suspended in 2021 for sharing content deemed problematic by Twitter’s moderation team. Muse claimed this was due to activist pressure from groups like Black Lives Matter, suggesting targeted deplatforming by Twitter staff sympathetic to certain causes. However, no concrete evidence links this to collusion with Twitter’s owners.

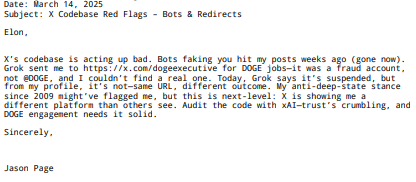

- Government Influence: A 2022 Substack post by Amuse alleged that Twitter operated a “modern-day Star Chamber” to silence voices at the government’s request, citing the suspension of journalist Paul Sperry. While these claims resonate with some X users, they lack corroboration from primary sources and are considered inconclusive.

Overall, while the Twitter Files and related allegations highlight inconsistencies in pre-2022 moderation, they fall short of proving widespread collusion between Twitter’s staff, owners, and external actors. The platform’s challenges were more likely a result of underfunded moderation, competing priorities, and the inherent difficulty of policing a global platform.

X’s Progress in Countering Fake Accounts and Astroturfing (2022–2025)

Since Musk’s takeover, X has prioritized reducing fake accounts and astroturfing, with a focus on transparency and technological improvements. Key efforts and their outcomes as of April 2025 include:

- Mass Account Suspensions:

  - X reported suspending 460 million accounts in the first half of 2024 and 335 million in the second half for violating its Platform Manipulation and Spam policy. These actions targeted bots, spam accounts, and coordinated inauthentic behavior, such as accounts promoting Azerbaijan’s COP29 narrative.

  - Impact: While these numbers suggest aggressive enforcement, critics note that fake accounts persist, with a 2024 Cyabra study estimating 15% of pro-Trump accounts and 7% of pro-Biden accounts were inauthentic. The sheer volume of suspensions indicates progress but also underscores the scale of the problem.

- Policy Updates:

  - X introduced stricter rules requiring parody and fan accounts to explicitly label themselves as such, reducing impersonation risks.

  - The platform expanded its Community Notes feature, allowing users to fact-check misleading posts. However, a 2024 Center for Countering Digital Hate (CCDH) report found that 74% of 283 misleading election-related posts lacked visible Community Notes, suggesting limited effectiveness.

- Algorithmic and Detection Improvements:

  - X has invested in AI-driven tools to detect coordinated inauthentic behavior, building on lessons from cases like the 2012 South Korean campaign, where account coordination was a key indicator.

  - Despite these efforts, a 2024 Global Witness investigation identified 182 suspicious accounts inflating Azerbaijan’s COP29 image, indicating that sophisticated astroturfing campaigns can still evade detection.

- Transparency Initiatives:

  - In September 2024, X released its first transparency report since Musk’s takeover, detailing enforcement actions and policy changes. The report emphasized a “rethought” approach to data sharing, driven by a desire for openness rather than regulatory pressure.

  - X spokesperson Michael Abboud stated, “Transparency is at the core of what we do at X,” highlighting the platform’s commitment to public accountability.
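
To make the "account coordination" signal mentioned under Algorithmic and Detection Improvements more concrete, here is a minimal Python sketch of one common heuristic: flagging groups of distinct accounts that post near-identical text within a short time window. It is an illustration only and does not describe X's actual detection systems, which are not public. The function name `find_coordinated_clusters`, the 10-minute window, the three-account threshold, and the sample data are all assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_coordinated_clusters(posts, window=timedelta(minutes=10), min_accounts=3):
    """Flag texts posted by many distinct accounts within a short time window.

    `posts` is an iterable of (account, text, timestamp) tuples.
    Returns a list of (normalized_text, sorted_account_names) pairs.
    Hypothetical heuristic for illustration, not any platform's real method.
    """
    # Group posts by text, ignoring case and extra whitespace.
    by_text = defaultdict(list)
    for account, text, ts in posts:
        key = " ".join(text.lower().split())
        by_text[key].append((ts, account))

    clusters = []
    for key, items in by_text.items():
        items.sort()  # chronological order
        start = 0
        best = set()
        # Slide a time window over the posts and keep the largest
        # set of distinct accounts seen inside any single window.
        for end in range(len(items)):
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acc for _, acc in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            clusters.append((key, sorted(best)))
    return clusters


# Made-up sample data: three accounts post the same line within minutes.
t0 = datetime(2024, 11, 1, 12, 0)
sample_posts = [
    ("acct_a", "Great summit!", t0),
    ("acct_b", "great   summit!", t0 + timedelta(minutes=2)),
    ("acct_c", "Great summit!", t0 + timedelta(minutes=5)),
    ("acct_d", "Great summit!", t0 + timedelta(hours=3)),
    ("acct_e", "An unrelated post", t0),
]
print(find_coordinated_clusters(sample_posts))
```

A rule this simple is easy to evade by paraphrasing or staggering posts, which is consistent with the point above that sophisticated campaigns can still slip past detection. Real systems would likely combine many more signals, such as account age, follower overlap, and posting cadence.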

Challenges and Criticisms

Despite these efforts, X faces ongoing challenges:

- Reduced Moderation Capacity: Musk’s decision to cut 80% of Twitter’s staff, including trust and safety teams, has strained moderation efforts. The remaining trust and safety team of approximately 20 staff (down from 230) struggles to handle the volume of content.

- Persistent Astroturfing: Coordinated campaigns, such as those during the 2024 U.S. election and COP29, show that astroturfing remains a problem. A November 2024 DW report confirmed new accounts continued manipulative tactics, exploiting X’s reduced oversight.

- Misinformation Amplification: Musk’s own posts, viewed billions of times, have been criticized for spreading false claims, undermining X’s anti-misinformation efforts. A CCDH report noted 87 misleading election-related posts by Musk in 2024, amassing 2 billion views.

- User Exodus: X’s user base has declined by 30% in the U.S. since 2022, partly due to concerns over misinformation and bot activity, reducing its political influence but complicating efforts to maintain a vibrant, authentic community.

Skeptical Perspective

X’s progress in countering fake accounts and astroturfing is notable but incomplete. The platform’s aggressive suspensions and policy updates show intent, but the persistence of sophisticated campaigns suggests that current measures are reactive rather than preventive. Astroturfing thrives in environments where moderation is underfunded and algorithms prioritize engagement over authenticity—conditions that X has not fully addressed. The inherited issues from Twitter, while exacerbated by lax pre-2022 moderation, were not necessarily the result of collusion but rather systemic inefficiencies. Allegations of staff or owner collusion remain speculative, lacking the evidence needed to shift the narrative from mismanagement to malice.

Conclusion

X has made strides in tackling fake accounts and astroturfing since 2022, with significant account suspensions, policy reforms, and transparency efforts marking a departure from Twitter’s approach. However, the inherited challenges—rooted in Twitter’s struggle to balance free expression with moderation—persist, compounded by reduced staff and the platform’s evolving role in political discourse. While allegations of collusion with Twitter’s former staff or owners add intrigue, they lack conclusive evidence and distract from the broader issue: building a platform resilient to manipulation. For X to succeed, it must invest in proactive detection, rebuild moderation capacity, and align its algorithms with authenticity over virality. As of April 2025, X’s journey toward a cleaner, more trustworthy platform remains a work in progress.
