Introduction

In an age where online discourse has become the lifeblood of public debate, the concept of “shadow banning” has risen to the forefront of conversations about transparency, free speech, and digital ethics. Shadow banning refers to the practice of limiting the visibility of a user’s content—often without the user’s knowledge—on platforms such as search engines (Google, DuckDuckGo), video streaming services (YouTube, Bitchute, Rumble), and social media channels (Facebook, Twitter, Instagram, and others).

While platform providers often assert that this practice is designed to combat spam or abusive content, critics argue that it can also be used to suppress certain political views or individuals. This article explores the realities of shadow banning across major digital platforms, examining notable case studies and legal actions. In doing so, we also introduce a novel alternative called “Common Grounds,” developed by Jason Page of Page Telegram, which aims to bring a fresh approach to online discourse.

Understanding Shadow Banning

What is Shadow Banning?

Shadow banning occurs when a platform deliberately reduces or removes the visibility of a user’s content to the broader audience, typically without issuing an explicit ban or notifying the user. From the user’s perspective, they can still post and interact with the site, but their content may no longer appear in public feeds, search results, or other indexes.

This practice differs from a traditional ban, which is overt and clearly communicated. In contrast, shadow banning is subtle, making it difficult for users—or their followers—to realize that content is being effectively muted.
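The core of the mechanism is a visibility filter: the author keeps seeing their own posts while everyone else does not. Below is a minimal sketch, assuming a hypothetical feed in which each post records its author and the platform keeps a set of shadow-banned accounts. `Post`, `visible_posts` and `shadow_banned` are illustrative names, not any platform's actual code.

```python
# Hypothetical sketch of a shadow-ban visibility filter (illustrative names only).
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    text: str


def visible_posts(viewer: str, posts: list[Post], shadow_banned: set[str]) -> list[Post]:
    """Return the posts that `viewer` would see in a public feed.

    Posts by shadow-banned authors are hidden from everyone except their
    author, so the author's own view of the feed looks unchanged.
    """
    return [p for p in posts if p.author not in shadow_banned or p.author == viewer]


posts = [Post("alice", "Hello"), Post("bob", "Hi there")]
shadow_banned = {"bob"}

print([p.text for p in visible_posts("bob", posts, shadow_banned)])    # ['Hello', 'Hi there']
print([p.text for p in visible_posts("carol", posts, shadow_banned)])  # ['Hello']
```

Because the banned author's own view is untouched, nothing on their screen signals that their reach has changed, which is exactly what makes the practice hard to detect.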

Why Do Platforms Implement Shadow Banning?

- Spam and Misuse Prevention: Platforms like Twitter and Facebook historically used shadow banning to limit spam accounts, bots, and abusive behavior.

- Brand Safety and Advertiser Considerations: Many platforms seek to maintain a specific environment that appeals to advertisers, potentially limiting the reach of content they deem “controversial.”

- Community Guidelines: Content that violates or skirts the edges of terms of service—such as hateful or inflammatory speech—may be downranked in order to encourage civil discourse and reduce legal exposure.

- Government Sanctions Against Free Speech Labeled as “Disinfo”: Content labeled as disinformation can become difficult, or even impossible, to find through search unless the creator searches for it while logged into their own account, which gives them the illusion that it is still accessible. Controversial keywords, such as articles on “the Hutchison Effect” or news on “Exotic Weapon Technologies” and “Power Transmission via RF,” can all potentially trigger shadow banning of a person’s page on these public services and platforms.
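Downranking, mentioned under Community Guidelines above, differs from outright removal: the content stays on the platform but sinks in the feed. The sketch below assumes a hypothetical feed where each post carries a relevance score and a `flagged` marker, and flagged posts have their score multiplied by an arbitrary `penalty` of 0.1. Real platforms do not publish their ranking formulas, so these names and values are purely illustrative.

```python
# Hypothetical sketch of score-based downranking (illustrative names and values).
from dataclasses import dataclass


@dataclass(frozen=True)
class RankedPost:
    post_id: str
    relevance: float  # base score from a hypothetical ranking model
    flagged: bool     # True if the post is judged to skirt the guidelines


def rank_feed(posts: list[RankedPost], penalty: float = 0.1) -> list[RankedPost]:
    """Order posts by score, multiplying flagged posts' scores by `penalty`.

    Nothing is deleted: flagged posts stay in the feed but drop toward the bottom.
    """
    def score(p: RankedPost) -> float:
        return p.relevance * (penalty if p.flagged else 1.0)

    return sorted(posts, key=score, reverse=True)


feed = [
    RankedPost("a", 0.9, flagged=True),
    RankedPost("b", 0.5, flagged=False),
    RankedPost("c", 0.2, flagged=False),
]
print([p.post_id for p in rank_feed(feed)])  # ['b', 'c', 'a']
```

Post "a" has the highest base relevance, yet after the penalty (0.9 × 0.1 = 0.09) it ranks last, even though it was never removed.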

Search Engine Perspectives: Google vs. DuckDuckGo

Google

Google’s algorithms are opaque and extremely sophisticated. Accusations of shadow banning typically involve claims that Google’s search ranking algorithms disproportionately hide or downrank certain topics. Although Google denies systematic political bias, critics have pointed to internal documents suggesting various forms of algorithmic filtering.

DuckDuckGo

DuckDuckGo, a privacy-focused search engine, promotes itself as offering neutral search results without extensive tracking or personalization. Although any ranking system carries some degree of bias, DuckDuckGo is generally perceived to be less prone to allegations of deliberate political filtering when compared to Google.

Video Platform Policies: YouTube vs. Bitchute vs. Rumble

YouTube

As the world’s largest video platform, YouTube enforces strict community guidelines. It is known to demonetize or downrank content considered harmful, controversial, or in violation of policy. Critics argue these measures can devolve into shadow banning, where content is not officially removed but is rendered difficult to discover through algorithmic suppression.

Bitchute and Rumble

Bitchute and Rumble emerged as alternatives for creators who felt marginalized by YouTube’s more stringent moderation. These platforms have more lenient content policies but smaller user bases, thus sometimes providing less reach. Nonetheless, many users appreciate the freer exchange of ideas, even if they do so within smaller communities.

Popular Social Media: Content Visibility and Legal Ramifications

On major social media services such as Facebook, Twitter, Instagram, and TikTok, shadow banning accusations are common. One high-profile example was Twitter’s 2018 “quality filter” (Conger, 2018), which critics claimed disproportionately impacted conservative accounts. Twitter denied political bias, describing the practice as a broad method to deter spam and toxic content.

Legal actions have occasionally been pursued by individuals or organizations who argue that shadow banning violates their right to free expression. However, Section 230 of the U.S. Communications Decency Act gives platforms broad discretion in moderating user-generated content, and the First Amendment restricts government action rather than the decisions of private companies. Together, these make it difficult for plaintiffs to prevail in court.

Case Studies

- Project Veritas vs. Social Platforms (2019–2021): Investigative group Project Veritas released undercover videos they claimed exposed internal bias. Critics argued the evidence was selectively edited, but it reignited debates on how deeply tech companies manipulate content visibility (Molla, 2020).

- Conservative Bloggers vs. Facebook (2018): Several conservative bloggers accused Facebook of significantly reducing their page reach via algorithm changes. Although Facebook admitted to prioritizing personal connections in user feeds, critics suspected political motivations (Hern, 2018).

- Rumble’s Antitrust Lawsuit Against Google (2021): Rumble sued Google, alleging unfair preference for YouTube in search results. Though not strictly a “shadow ban” scenario, it highlighted concerns about search bias and platform dominance (Feiner, 2021).

Emerging Alternatives and “Common Grounds” by Jason Page

As frustrations mount over perceived bias, a wave of alternative or decentralized platforms—such as Mastodon, Gab, Parler—have taken root. However, many of these communities become echo chambers for specific ideological positions, hampering their ability to foster genuine cross-spectrum dialogue.

“Common Grounds”: A Novel Approach

Due for release on or around February 14, 2025, “Common Grounds”—developed by Jason Page of Page Telegram—aims to be a platform that transcends identity-based political segregation. By adopting innovative moderation and content format strategies, it aspires to promote balanced debate and genuine discourse among users of different backgrounds and viewpoints.

Key Features of Common Grounds

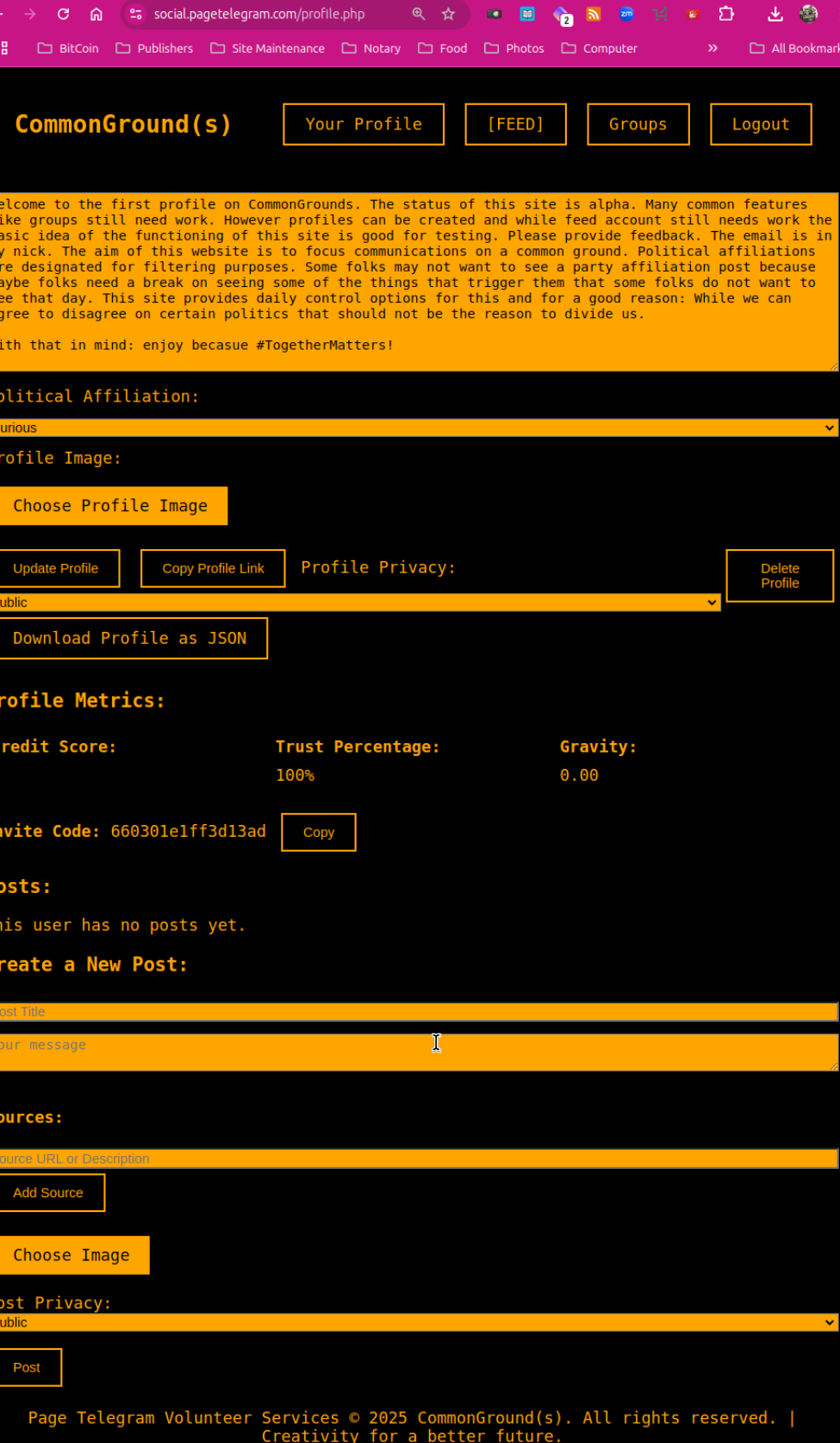

- Consensual Invitations: Users join by receiving invites from current members in a manner designed to diversify the user base rather than restrict it. This approach seeks to encourage civil dialogue across varied perspectives.

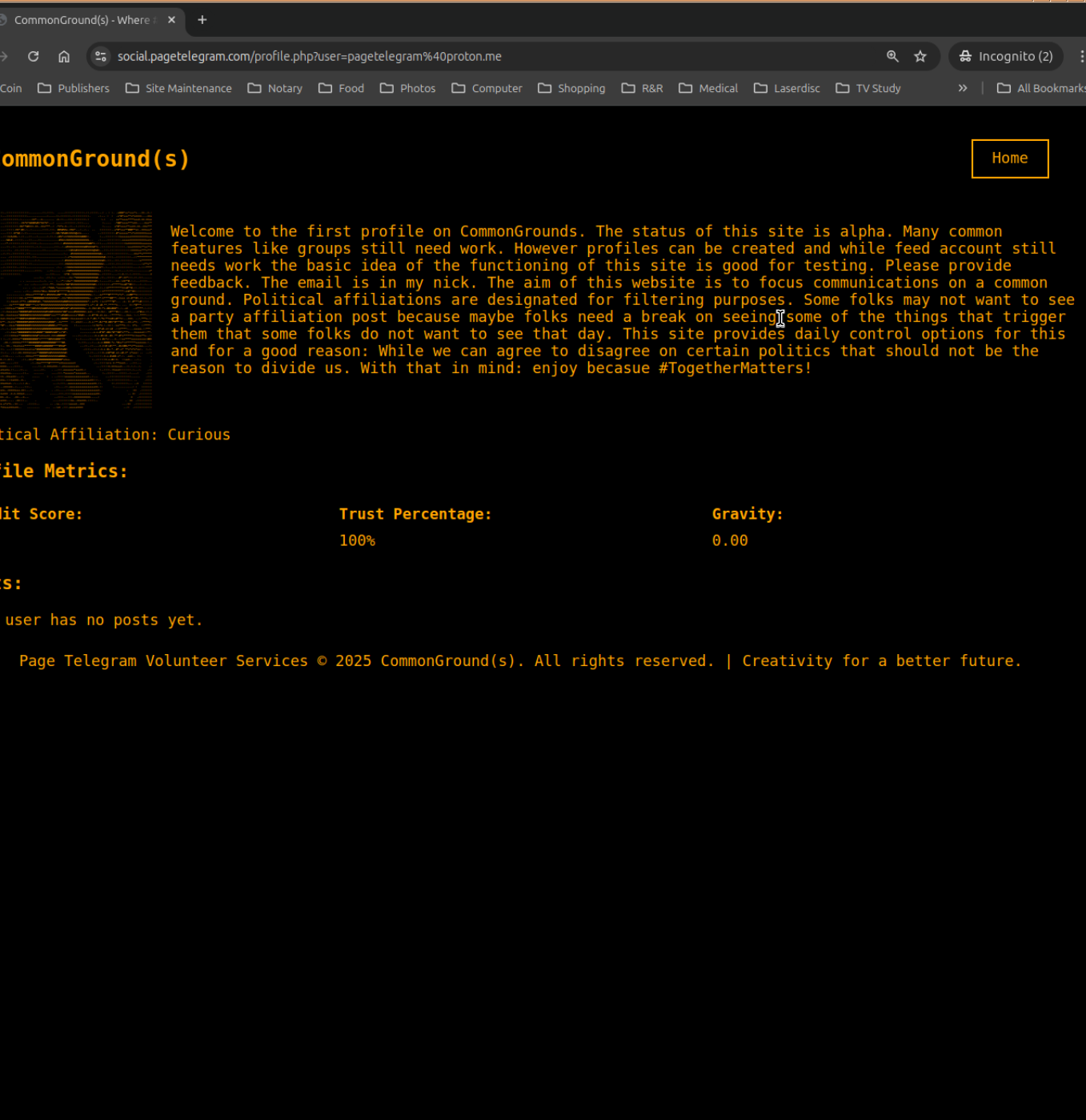

- Text-Only Platform: Unlike conventional social media platforms, Common Grounds does not host images in their native format. Instead, uploaded images (for posts, groups, or even profile pictures) are automatically converted to ASCII art. This design choice offers an “impression” of the original image while preserving the platform’s text-centric philosophy.

- Transparent Moderation Policies: Moderation decisions are community-driven and openly documented. Users can review and discuss flagged or removed content, ensuring accountability.

- RSS Feeds for Public Profiles and Groups: Any public-facing content, profile, or group can be accessed through an RSS feed, allowing users to stay updated without relying on platform-specific notifications.

- JSON Import for Facebook Profiles: In an upcoming feature, users will be able to import a text-only version of their Facebook profile from the JSON-format backup generated by Facebook’s own data-download process, allowing them to “transplant” their social media footprint without carrying over graphics or personal images. This preserves the continuity of one’s social history while respecting the text-based ethos of Common Grounds.
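How Common Grounds converts uploaded images to ASCII art is not described here, but the general technique can be sketched. The minimal example below assumes the image has already been reduced to a small grid of grayscale values (0 = black, 255 = white) and maps each value onto a “ramp” of characters ordered from dense to sparse. `to_ascii` and the ramp string are illustrative choices, not the platform’s code.

```python
# Hypothetical sketch of grayscale-to-ASCII conversion (not Common Grounds' code).
# Characters ordered from "darkest" (most ink) to "lightest" (a space).
RAMP = "@%#*+=-:. "


def to_ascii(pixels: list[list[int]], ramp: str = RAMP) -> str:
    """Map each grayscale value (0 = black, 255 = white) to a ramp character."""
    lines = []
    for row in pixels:
        chars = []
        for value in row:
            # Scale 0..255 onto the ramp's index range 0..len(ramp) - 1.
            index = value * (len(ramp) - 1) // 255
            chars.append(ramp[index])
        lines.append("".join(chars))
    return "\n".join(lines)


# A tiny 3x4 "image": a dark-to-light gradient, then the reverse.
image = [
    [0, 85, 170, 255],
    [0, 85, 170, 255],
    [255, 170, 85, 0],
]
print(to_ascii(image))
```

For a real upload, the pixel grid could come from an imaging library such as Pillow (for example, converting to grayscale with `Image.convert("L")`) after downscaling, since each character stands in for a block of pixels. Text characters are also roughly twice as tall as they are wide, so the image height is usually halved before conversion to keep proportions.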
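The RSS feeds described above for public profiles and groups can be produced with nothing more than a standard library. The sketch below assumes RSS 2.0 and a hypothetical list of public posts; `build_rss`, the item fields and the example.com URLs are illustrative, since Common Grounds’ actual feed format is not documented here.

```python
# Hypothetical sketch of an RSS 2.0 feed for a public profile or group.
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title: str, link: str, description: str, items: list[dict]) -> str:
    """Build a minimal RSS 2.0 document.

    Each item is a dict with 'title', 'link', 'text' and 'published'
    (a timezone-aware datetime).
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item in items:
        node = ET.SubElement(channel, "item")
        ET.SubElement(node, "title").text = item["title"]
        ET.SubElement(node, "link").text = item["link"]
        ET.SubElement(node, "description").text = item["text"]
        # RSS 2.0 expects RFC 822-style dates, which format_datetime produces.
        ET.SubElement(node, "pubDate").text = format_datetime(item["published"])
        ET.SubElement(node, "guid").text = item["link"]
    return ET.tostring(rss, encoding="unicode")


feed = build_rss(
    "Example group",
    "https://example.com/groups/example",
    "Public posts from a hypothetical group",
    [{
        "title": "First post",
        "link": "https://example.com/posts/1",
        "text": "Hello, RSS readers.",
        "published": datetime(2025, 2, 14, 12, 0, tzinfo=timezone.utc),
    }],
)
print(feed)
```

Any standard feed reader can poll a document like this, which is what lets users follow public content without platform-specific notifications.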
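Facebook’s “Download Your Information” tool can export account data as JSON. The exact file layout varies between export versions, so the sketch below assumes a simplified, hypothetical structure in which each post has a `timestamp`, a `data` list holding `post` text, and an `attachments` list. The importer keeps only the text and date, which mirrors the text-only import the feature describes; `import_text_posts` is an illustrative name, not Common Grounds’ code.

```python
# Hypothetical sketch of a text-only import from a simplified Facebook JSON export.
# The field names below are assumptions; real exports may differ.
import json
from datetime import datetime, timezone


def import_text_posts(raw_json: str) -> list[dict]:
    """Keep only the text and timestamp of each post, discarding attachments."""
    imported = []
    for entry in json.loads(raw_json):
        # Text may be split across several objects in the "data" list.
        texts = [d["post"] for d in entry.get("data", []) if "post" in d]
        if not texts:
            continue  # e.g. a photo-only post with no text to carry over
        imported.append({
            "published": datetime.fromtimestamp(entry["timestamp"], tz=timezone.utc),
            "text": "\n".join(texts),
        })
    return imported


sample = json.dumps([
    {"timestamp": 1600000000,
     "data": [{"post": "Hello from my old profile"}],
     "attachments": [{"data": [{"media": {"uri": "photos/1.jpg"}}]}]},
    {"timestamp": 1600000100,
     "attachments": [{"data": [{"media": {"uri": "photos/2.jpg"}}]}]},
])
posts = import_text_posts(sample)
print(posts)
```

Photo-only entries are skipped entirely, and attachment references are never read, so no images or media paths are carried over.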

Potential for Success and Challenges

Success Factors: The platform’s commitment to user-managed moderation, text-centric design and transparency may resonate with those seeking an alternative to mainstream social media. Moreover, RSS feeds and straightforward JSON imports provide additional flexibility and data ownership, which could appeal to privacy-conscious users.

Challenges: Competing with well-established networks will require a critical mass of adopters and persuasive messaging. Additionally, maintaining balanced moderation poses a perpetual challenge. Overly lenient policies risk enabling harmful content, whereas heavy-handed moderation can alienate those looking for an open forum.

Conclusion

Shadow banning remains a contentious element of online content moderation as platforms try to balance free expression, user experience and community standards. While Google, YouTube, Facebook, X (formerly Twitter) and others continue to be scrutinized for alleged hidden bias, new ventures such as Bitchute, Rumble and niche social networks have sought to fill perceived gaps in the market, although many of them have themselves fed misconceptions that leave the public more divided.

Enter “Common Grounds,” a text-only, transparent, and community-driven platform that is poised to challenge the entrenched norms of digital communication. By leaning into ASCII art conversions, RSS feeds, and JSON imports, it presents a refreshing, minimalist take on social networking. Whether it can amass a robust, diverse user base and maintain a fair moderation system could decide if it truly becomes the go-to alternative for free and open discourse in the years ahead.

References:

https://gizmodo.com

https://www.cnbc.com

https://www.theguardian.com

https://www.vox.com

https://www.projectveritas.com
